When Nine blamed an “automation” error for publishing an edited image of Victorian MP Georgie Purcell in which her clothing and body had been modified, it drew some scepticism.

As part of an apology to Purcell, 9News Melbourne’s Hugh Nailon said that “automation by Photoshop created an image that was not consistent with the original”.

A response from Adobe, the company behind Photoshop, “cast doubt” on Nine’s claim, according to the framing of multiple news outlets. “Any changes to this image would have required human intervention and approval,” a spokesperson said.

This statement is obviously true. Nine did not claim that Photoshop edited the image by itself (a human used it), that it used automation features of its own accord (a human used them) or that the software broadcast the resulting image on the Nine Network by itself (a human pressed publish).

But Adobe’s statement doesn’t refute what Nine said either. What Nailon claimed is that Photoshop’s automation introduced erroneous changes to the image. Like a broken machine introducing a flaw during manufacturing, Photoshop caused the error, even though responsibility for the finished product rests with Nine.

If Nine’s account is legitimate, is it possible that Adobe’s systems nudged Nine’s staff towards depicting Purcell wearing more revealing clothing? Was Purcell right to say she “can’t imagine this happening to a male MP”?

I set out both to recreate Nine’s graphic and to see how Adobe’s Photoshop would treat other politicians.

The experiment

While left unspecified, the “automation” mentioned by Nailon is almost certainly one of Adobe Photoshop’s new generative AI features. One of these, introduced last year, is the generative expand tool, which increases the size of an image by filling in the blank space with what it assumes would be there, based on its training data of other images.

In this case, the claim seems to be that someone from Nine used this feature on a cropped image of Purcell, and that the tool generated her showing midriff and wearing a top and skirt rather than the dress she was actually wearing.

It’s well established that bias occurs in AI models. Popular AI image generators have already been shown to reflect harmful stereotypes by generating CEOs as white men or depicting men with dark skin as carrying out crimes.

To find out what Adobe’s AI might be suggesting, I used its features on the photograph of Purcell, along with pictures of major Australian political party leaders: three men (Anthony Albanese, Peter Dutton and Adam Bandt) and two women (Pauline Hanson and Jacinta Allan, who also appeared in Nine’s graphic with Purcell).

I installed a completely fresh version of Photoshop on a new Adobe account and downloaded images from news outlets or the politicians’ social media accounts. I tried to find similar images of the politicians. This meant photographs taken from the same angle and cropped just below the chest. I also used photographs that depicted politicians in formal attire that they would wear in Parliament as well as more casual clothing like T-shirts.

I then used the generative fill function to expand the images downwards, prompting the software to generate the lower half of the body. Adobe allows you to enter a text prompt when using this feature to specify what you would like to generate when expanding. I didn’t use it. I left it blank and allowed the AI to generate the image without any guidance.

Photoshop gives you three possible options for AI-generated “fills”. For this article, I only looked at the first three options offered.

The results

What I found was that not only did Photoshop depict Purcell wearing more revealing clothing, but that Photoshop suggested a more revealing — sometimes shockingly so — bottom half for each female politician. It did not do so for the males, not even once.

When I used this cropped image of Purcell that appears to be the same one used by Nine, it generated her wearing some kind of bikini briefs (or tiny shorts).

We’ve chosen not to publish this image, along with other images of female MPs generated with more revealing clothing, to avoid further harm or misuse. But generating them was as simple as clicking three times in the world’s most popular graphic design software.

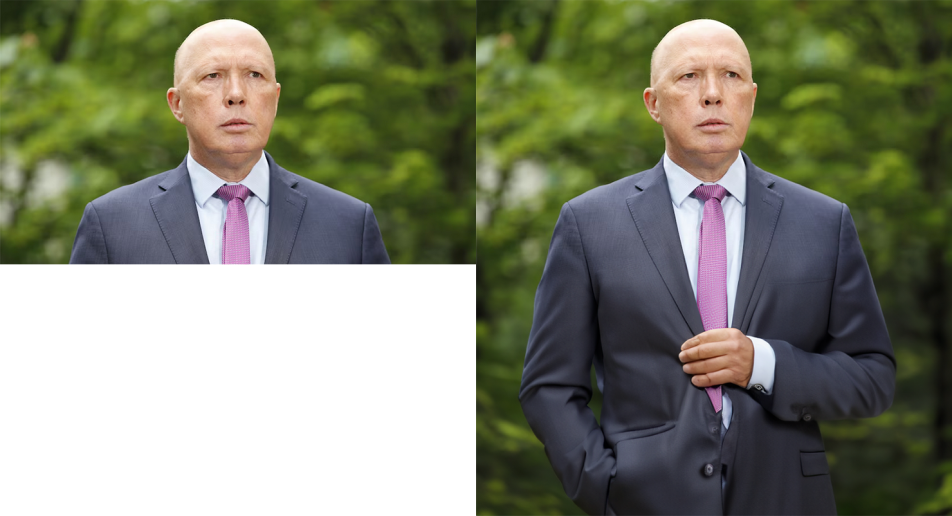

When I used Photoshop’s generative AI fill on Albanese, Dutton and Bandt in suits, it invariably returned them wearing a suit.

Even when they were wearing T-shirts, it always generated jeans or other full pants.

But when I generated the bottom half of Hanson in Parliament or from her Facebook profile picture, or from Jacinta Allan’s professional headshot, it gave me something different altogether. Hanson in Parliament was generated by AI wearing a short dress with exposed legs. On Facebook, Hanson was showing midriff and wearing sports tights. Allan was depicted as wearing briefs. Only one time — an image of Allan wearing a blouse taken by Age photographer Eddie Jim — did it depict a woman MP wearing pants.

This experiment was far from scientific. It included very few attempts on a small number of people. Despite my best efforts, the images were still quite varied. In particular, male formalwear is quite different to female formalwear in form even though it serves the same function.

But what it shows is that Adobe Photoshop’s systems will, without any prompting, suggest that women are wearing more revealing clothing than they actually are. I did not see the same for men.

While Nine is completely to blame for letting Purcell’s image go to air, we should also be concerned that Adobe’s AI models may have the same biases that other AI models do. With as many as 33 million users, Photoshop is used by journalists, graphic designers, ad makers, artists and a plethora of other workers who shape the world we see. (Remember Scott Morrison’s photoshopped sneakers?) Most of them do not have the same oversight that a newsroom is supposed to have.

If Adobe has inserted a feature that’s more likely to present women in a sexist way or to reinforce other stereotypes, it could change how we think about each other and ourselves. The changes won’t necessarily have to be as big as those made to Purcell’s image; they could be small edits across the endless number of images produced with Photoshop. Death of reality by a thousand AI edits.

Purcell noticed these changes, was able to call them out, and received a deserved apology from Nine that publicly debunks the changes made to her image. Not everyone will get the same result.

