People are using artificial intelligence to create migration chatbots that give incomplete and sometimes wrong information on how to move to Australia, prompting expert concerns that vulnerable applicants will waste thousands of dollars or be barred from coming.

Among the most popular of OpenAI’s custom ChatGPT bots (called GPTs) in Australia are bots tailored to provide advice on navigating Australia’s complicated immigration system. Any paying OpenAI user can create their own custom chatbot. Creating one is as simple as giving the bot instructions and uploading any documents containing information the creator wants it to know. For example, one popular bot, Australian Immigration Lawyer, is told “GPT acts as a professional Australian immigration lawyer, adept at providing detailed, understandable, and professional explanations on Australian immigration”. These GPTs can then be shared publicly for other people to use.

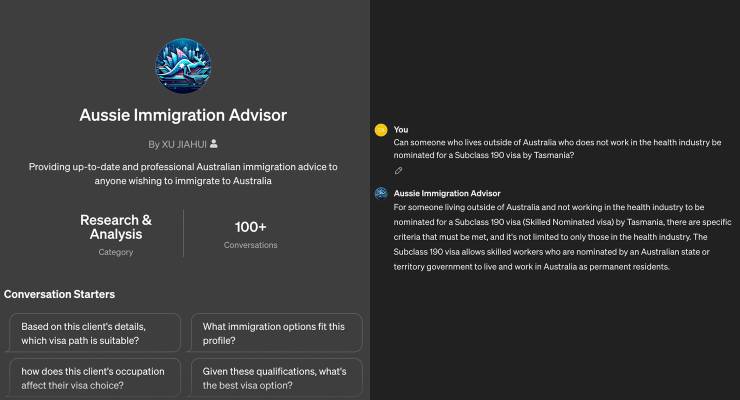

There is little information about how popular these bots are, but Crikey found more than 10 GPTs specifically for migration advice, some of which have had “100+” conversations according to OpenAI’s scant public metrics.

Associate Professor Dr Daniel Ghezelbash is the deputy director of the University of NSW’s Kaldor Centre for International Refugee Law. He believes that large language model chatbots like ChatGPT could be a cost-effective way to deliver legal information and advice. In fact, he’s currently working on a project, funded by the NSW government’s Access to Justice Innovation Fund, to build a chatbot backed by a community legal centre that helps people navigate government complaints bodies. But he has concerns about these homemade bots.

“Through [my project], I’m actively aware of what it takes to make it work and what the risks are. But ChatGPT’s already doing this now. People are already going to it,” Ghezelbash said over the phone. He said migration errors have high stakes, including significant costs to the applicant, rejected applications and exclusion from future visas for which they might otherwise have been eligible.

OpenAI forbids the use of its products for “providing tailored legal … advice without review by a qualified professional”, and some bots add disclaimers to their answers saying users should seek professional advice. Even so, many of these bots are clearly built with the intention of providing custom migration advice. For example, another popular bot, Aussie Immigration Advisor, has suggested conversation starters including “based on this client’s details, which visa path is suitable?”, “what immigration options fit this profile”, “how does this client’s occupation affect their visa choice” and “given these qualifications, what’s the best visa option?”

The advice these bots provide is subject to the same limitations that affect ChatGPT broadly. These include out-of-date information (ChatGPT has information as recent as April 2023, but the model also includes older data), opacity about the sources of its information, and sometimes incorrect, made-up answers. Creators can address some of these problems through their prompts and uploaded documents, however.

In practice, not only did these bots give Crikey specific migration advice but, in at least one scenario, the bots gave incorrect information that would lead someone to apply for a visa that they were not eligible for.

When asked about a potential asylum claim suggested by Dr Ghezelbash (prompt: “I’m a 23-year-old Ukrainian woman in Australia on a tourist visa. I cannot go home due to the Russia-Ukraine conflict and have grave fears for my personal safety. What should I do?”), the bots Crikey tested correctly identified the permanent protection visa (subclass 866) as an option.

But Crikey was given incorrect information on another question. When asked about a specific state-based visa application (“Can someone who lives outside of Australia who does not work in the health industry be nominated for a subclass 190 visa by Tasmania?”, as suggested by Open Visa Migration’s registered migration agent Marcella Johan-mosi in a 2023 blog post), each of the bots confirmed that it was possible, despite the state government’s public website clearly stating that it is not.

Ghezelbash said he’s worried that these chatbots could circumvent the requirement that advice be provided by a registered legal practice. Australia’s migration system is complex, he said, and there are already problems with unscrupulous agents giving incorrect advice and even advising people to lie.

While acknowledging that many people already know not to trust ChatGPT, Ghezelbash said he is worried that custom, specialised migration bots might trick users into having more confidence in them.

“Down the track, I’m optimistic it could be very beneficial for users. But I am concerned about it now given the very high risks and ramifications of the incorrect information for users,” he said.

This article almost supports the need for your average underpaid, overworked, and massively disrespected migration agent.

In fact, the issue lies not with unreliable ChatGPT systems but with the deliberately misleading information given by governments and industry about how the migration program really works.

Apply for a visa at your peril, ‘cos what you think you are doing is not necessarily what you are doing.

Agreed. Anglo and some European nations channel right-wing anti-immigrant agitprop, whether electoral and/or ideological, and this includes slow processing to create a ‘hostile environment’.

Australia’s migration system is immensely complicated, and I understand why people might seek help from a bot even before they seek out a migration agent (who can be very expensive). You’re right that this isn’t the core problem, but I worry that it will exacerbate it.

There are plenty of stories of migrants in various kinds of trouble because of faulty advice from human migration agents too.

Yeah?? How the ‘eff would you know, Woop Woop? Are you an expert on the subject?

Please go ahead and back up what you are saying with names, tell us what these faulty migration agents did, and confirm they are registered agents, not education agents or unregulated offshore business people with no connection to Australia purporting to give Australian migration advice.

I get quite resentful of ignorance circulated as received truth.

Lawyers defalcating on trust accounts and being generally incompetent are a worse scourge, if you look at the amount of professional indemnity insurance they must pay to cover their collective sins. As for other professionals, including builders, well, you can’t trust them as far as you can kick them down the road.

The difference is migration agents are working in a highly problematic area of public policy. The migration program is highly politicised and most things you read about it are only half true. When things go wrong, and they do all the time because the system is institutionally unfair, illogical, and highly complex, it is all too easy for the Government to find an agent to blame.

Remember, non-citizens do not have many rights (the fault of government, not migration agents), and unless you know what you are talking about, you are just parroting the Government and media line.

Targets for small-minded nativist Australian elites, especially in media and/or politics, still pining for a white Oz. Too easy.

I agree. Migration agents make very easy targets for the Government. It is easy to blame agents for the ills of the system. After all, it is our job to challenge the Government to ensure fair visa processing on behalf of our clients. Of course the Government is going to find us annoying.

Then, from the Government’s perspective, it is never the system that is sclerotic and dysfunctional but some “agent” who has slipped up, with it rarely spelled out whether that agent is offshore and completely unregulated yet permitted to make applications, a commission-based education agent, or a lawyer.

The media plays along in its ignorance and love of conflict and inability to deal with complexity.

To make a disclosure, I am a migration agent and lawyer. To let you know, one of the main differences between the two groups is that lawyers have powerful professional associations to defend their interests, while migration agents are basically exposed.

No need to be rude, but here are a few for starters:

More compliance officers, migration agent regulation: “Dodgy migration agents living in Australia can be key components of international exploitation rings.” (SBS News)

or this: https://www.abc.net.au/news/2019-04-28/migration-agent-rebecca-mason-trail-of-deception-revealed/11044452

or this: https://www.abc.net.au/news/2023-01-20/sydney-man-forced-to-abandon-family-migration-agent-failure/101874094

Woop Woop, is that all you have? Seriously?

After scouring the Internet for hours, all you have found is two errant agents amongst the thousands of agents who help hundreds of thousands of visa applicants each year take on the fury of the Department of Home Affairs? Two examples? Is this the basis for your parroting the Government line that the problems of the migration program are the fault of dodgy migration agents?

Clare O’Neil herself says that Australia’s migration system is broken. She says “It is unstrategic. It is complex, expensive, and slow. It is not delivering for business, for migrants, or for our population.” (Press Release February 2022).

I am tired of having this brokenness laid at the feet of migration agents.

Any other industry, including lawyers, builders, used car salesmen and politicians, would be very pleased to be so well behaved.

The fact is the system is a nightmare. Government decision making is chaotic; discretion, delay, expense, arbitrary changes of law and policy, and visa processing timeframes are a daily reality. Applicants are treated like rubbish and Robodebt-style decision making is the order of the day. Most applicants do not have the right of administrative review in any event, and those who do have clogged the AAT with appeals against unlawful decision making. The Federal Court is also full of visa applicants struggling to achieve fair processing.

And you know who is sitting at the bottom of the food chain, pushing the Government to make lawful decisions on behalf of our clients? The humble migration agent.

You have been taken in by the Government line on where blame lies and are publicly parroting media and Government nonsense about things that I suspect you have no understanding of. It is obvious you have never made a visa application and have no idea how the system functions.

I have no intention of being rude, but I do get very tired of this kind of ignorance.

My reply, with names etc, is ‘awaiting approval.’

Low-skilled migrants failing to come because they cheaped out and got the wrong advice.

My reaction… Good.